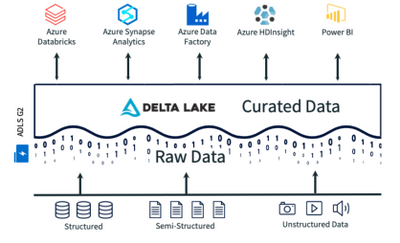

this article was first published on search technologies' blog . we really are at the start of a long and exciting journey! In this scenario, data engineers must spend time and energy deleting any corrupted data, checking the remainder of the data for correctness, and setting up a new write job to fill any holes in the data. A data lake is a central location that holds a large amount of data in its native, raw format. Data warehousesemerged as a technology that brings together an organizations collection of relational databases under a single umbrella, allowing the data to be queried and viewed as a whole. To make big data analytics possible, and to address concerns about the cost and vendor lock-in of data warehouses, Apache Hadoop emerged as an open source distributed data processing technology. Data processing and continuous data engineering, Data governance - Discoverability, Security and Compliance. A centralized data lake eliminates problems with data silos (like data duplication, multiple security policies and difficulty with collaboration), offering downstream users a single place to look for all sources of data. See the original article here.

These issues can stem from difficulty combining batch and streaming data, data corruption and other factors. Different types of data access and tooling are supported. data lakes will have tens of thousands of tables/files and billions of records. for example, they can analyze how much product is produced based on raw material, labor, and site characteristics are taken into account. It works hand-in-hand with the MapReduce algorithm, which determines how to split up a large computational task (like a statistical count or aggregation) into much smaller tasks that can be run in parallel on a computing cluster. Delta Lakeuses caching to selectively hold important tables in memory, so that they can be recalled quicker. Delta Lakeuses Spark to offer scalable metadata management that distributes its processing just like the data itself. Laws such as GDPR and CCPA require that companies are able to delete all data related to a customer if they request it. Companies need to be able to: Delta Lakesolves this issue by enabling data analysts to easily query all the data in their data lake using SQL. there may be a licensing limit to the original content source that prevents some users from getting their own credentials. However, they are now available with the introduction of open source Delta Lake, bringing the reliability and consistency of data warehouses to data lakes. Until recently, ACID transactions have not been possible on data lakes. knowing historical data from different locations can increase sizeand quality of a yield. Raw data can be retained indefinitely at low cost for future use in machine learning and analytics. Data lakes also make it challenging to keep historical versions of data at a reasonable cost, because they require manual snapshots to be put in place and all those snapshots to be stored. Learn why Databricks was named a Leader and how the lakehouse platform delivers on both your data warehousing and machine learning goals. Companies often built multiple databases organized by line of business to hold the data instead.

When properly architected, data lakes enable the ability to: Data lakes allow you to transform raw data into structured data that is ready for SQL analytics, data science and machine learning with low latency.

ACID properties(atomicity, consistency, isolation and durability) are properties of database transactions that are typically found in traditional relational database management systems systems (RDBMSes). bio-pharmais a heavily regulated industry, so security and following industry standard practices on experiments is a critical requirement. Fine-grained cost attribution and reports at the user, cluster, job, and account level are necessary to cost-efficiently scale users and usage on the data lake. Hortonworks was the only distributor to provide open source Hadoop distribution i.e. SQL is the easiest way to implement such a model, given its ubiquity and easy ability to filter based upon conditions and predicates. ClouderaandHortonworkshave merged now. Since its introduction, Sparks popularity has grown and grown, and it has become the de facto standard for big data processing, in no small part due to a committed base of community members and dedicated open source contributors. Check our our website to learn more ortry Databricks for free. For many years, relational databases were sufficient for companies needs: the amount of data that needed to be stored was relatively small, and relational databases were simple and reliable. It can be hard to find data in the lake. With the rise of the internet, companies found themselves awash in customer data. Unlike most databases and data warehouses, data lakes can process all data types including unstructured and semi-structured data like images, video, audio and documents which are critical for todays machine learning and advanced analytics use cases. Without a way to centralize and synthesize their data, many companies failed to synthesize it into actionable insights. We can use HDFS as raw storage area. at search technologies, were using big data architectures to improve search and analytics, and were helping organizations do amazing things as a result. Data lakes are incredibly flexible, enabling users with completely different skills, tools and languages to perform different analytics tasks all at once. Some early data lakes succeeded, while others failed due to Hadoops complexity and other factors. Learn more about Delta Lake. multiple user interfaces are being created to meet the needs of the various user communities. A Data Lake Architecture With Hadoop and Open Source Search Engines. Ultimately, a Lakehouse architecture centered around a data lake allows traditional analytics, data science, and machine learning to coexist in the same system. Even cleansing the data of null values, for example, can be detrimental to good data scientists, who can seemingly squeeze additional analytical value out of not just data, but even the lack of it. Cloud providers support methods to map the corporate identity infrastructure onto the permissions infrastructure of the cloud providers resources and services. the enterprise data lake and big data architectures are built on cloudera, which collects and processes all the raw data in one place, and then indexes that data into a cloudera search, impala, and hbase for a unified search and analytics experience for end-users. A lakehouse enables a wide range of new use cases for cross-functional enterprise-scale analytics, BI and machine learning projects that can unlock massive business value. there are many different departments within these organizations and employees have access to many different content sources from different business systems stored all over the world. governance and security are still top-of-mind as key challenges and success factors for the data lake. At first, data warehouses were typically run on expensive, on-premises appliance-based hardware from vendors like Teradata and Vertica, and later became available in the cloud. Data lakes are often used to consolidate all of an organizations data in a single, central location, where it can be saved as is, without the need to impose a schema (i.e., a formal structure for how the data is organized) up front like a data warehouse does. With Delta Lake, customers can build a cost-efficient, highly scalable lakehouse that eliminates data silos and provides self-serving analytics to end users. Some of the major performance bottlenecks that can occur with data lakes are discussed below. It is the primary way that downstream consumers (for example, BI and data analysts) can discover what data is available, what it means, and how to make use of it. Join the DZone community and get the full member experience. Spark also made it possible to train machine learning models at scale, query big data sets using SQL, and rapidly process real-time data with Spark Streaming, increasing the number of users and potential applications of the technology significantly. being able to search and analyze their data more effectively will lead to improvements in areas such as: drug production trends looking for trends or drift in batches of drugs or raw materials which would indicate potential future problems (instrument calibration, raw materials quality, etc.) For business intelligence reports, SQL is the lingua franca and runs on aggregated datasets in the data warehouse and also the data lake. With the increasing amount of data that is collected in real time, data lakes need the ability to easily capture and combine streaming data with historical, batch data so that they can remain updated at all times. which should be addressed. Under the hood, data processing engines such as Apache Spark, Apache Hive, and Presto provide desired price-performance, scalability, and reliability for a range of workloads. Today, many modern data lake architectures use Spark as the processing engine that enables data engineers and data scientists to perform ETL, refine their data, and train machine learning models. For proper query performance, the data lake should be properly indexed and partitioned along the dimensions by which it is most likely to be grouped. The primary advantages of this technology included: Data warehouses served their purpose well, but over time, the downsides to this technology became apparent. There is no in between, which is good because the state of your data lake can be kept clean. Without a data catalog, users can end up spending the majority of their time just trying to discover and profile datasets for integrity before they can trust them for their use case. You should review access control permissions periodically to ensure they do not become stale.

where necessary, content will be analyzed and results will be fed back to users via search to a multitude of uis across various platforms. Use data catalog and metadata management tools at the point of ingestion to enable self-service data science and analytics. Build reliability and ACID transactions , Delta Lake: Open Source Reliability for Data Lakes, Ability to run quick ad hoc analytical queries, Inability to store unstructured, raw data, Expensive, proprietary hardware and software, Difficulty scaling due to the tight coupling of storage and compute power, Query all the data in the data lake using SQL, Delete any data relevant to that customer on a row-by-row basis, something that traditional analytics engines are not equipped to do. Free access to Qubole for 30 days to build data pipelines, bring machine learning to production, and analyze any data type from any data source. factors which contribute to yield the data lake can help users take a deeper look at the end product quantity based on the material and processes used in the manufacturing process. The cost of big data projects can spiral out of control. the security measures in the data lake may be assigned in a way that grants access to certain information to users of the data lake that do not have access to the original content source. Connect with validated partner solutions in just a few clicks. It stores the data in its raw form or an open data format that is platform-independent. Whether the data lake is deployed on the cloud or on-premise, each cloud provider has a specific implementation to provision, configure, monitor, and manage the data lake as well as the resources it needs. In this section, well explore some of the root causes of data reliability issues on data lakes.

robotics aws curriculum cloud doherty emily announces source open So, I am going to present reference architecture to host data lakeon-premiseusing open source tools and technologies like Hadoop. New survey of biopharma executives reveals real-world success with real-world evidence. This is exacerbated by the lack of native cost controls and lifecycle policies in the cloud. Data access can be through SQL or programmatic languages such as Python, Scala, R, etc. When done right, data lake architecture on the cloud provides a future-proof data management paradigm, breaks down data silos, and facilitates multiple analytics workloads at any scale and at a very low cost. Without the proper tools in place, data lakes can suffer from data reliability issues that make it difficult for data scientists and analysts to reason about the data. this can include metadata extraction, format conversion, augmentation, entity extraction, cross-linking, aggregation, de-normalization, or indexing. Without the proper tools in place, data lakes can suffer from reliability issues that make it difficult for data scientists and analysts to reason about the data. HDFS allows a single data set to be stored across many different storage devices as if it were a single file. It should be available to users on a central platform or in a shared repository. Data in the lake should be encrypted at rest and in transit. Hence, we can leverage data science work bench fromClouderaand ingestion tool sets likeHortonworksData Flow (HDF)fromHortonworksto have a very robust end to endarchitecturefor Data Lake. In a perfect world, this ethos of annotation swells into a company-wide commitment to carefully tag new data. Simplifying that architecture by unifying all your data in a data lake is the first step for companies that aspire to harness the power of machine learning and data analytics to win in the next decade. Without easy ways to delete data, organizations are highly limited (and often fined) by regulatory bodies. View-based access controls are available on modern unified data platforms, and can integrate with cloud native role-based controls via credential pass-through, eliminating the need to hand over sensitive cloud-provider credentials. On the other hand, this led to data silos: decentralized, fragmented stores of data across the organization. Explore the next generation of data architecture with the father of the data warehouse, Bill Inmon. Apache, Apache Spark, LDAP and/or Active Directory are typically supported for authentication. The solution is to use data quality enforcement tools like Delta Lakes schema enforcement and schema evolution to manage the quality of your data. We get good help from hortonworks community though. }); For these reasons, a traditional data lake on its own is not sufficient to meet the needs of businesses looking to innovate, which is why businesses often operate in complex architectures, with data siloed away in different storage systems: data warehouses, databases and other storage systems across the enterprise. Delta Lake solves the issue of reprocessing by making your data lake transactional, which means that every operation performed on it is atomic: it will either succeed completely or fail completely. As a result, most of the data lakes in the enterprise have become data swamps. Data lakes were developed in response to the limitations of data warehouses. On the one hand, this was a blessing: with more and better data, companies were able to more precisely target customers and manage their operations than ever before. Save all of your data into your data lake without transforming or aggregating it to preserve it for machine learning and data lineage purposes. In order to implement a successful lakehouse strategy, its important for users to properly catalog new data as it enters your data lake, and continually curate it to ensure that it remains updated. The unique ability to ingest raw data in a variety of formats (structured, unstructured, semi-structured), along with the other benefits mentioned, makes a data lake the clear choice for data storage. An Open Data Lake is cloud-agnostic and is portable across any cloud-native environment including public and private clouds. The ingest capability supports real-time stream processing and batch data ingestion; ensures zero data loss and writes exactly once or at least once; handles schema variability; writes in the most optimized data format into the right partitions and provides the ability to re-ingest data when needed. Data lakes can hold millions of files and tables, so its important that your data lake query engine is optimized for performance at scale. the data includes: manufacturing data (batch tests, batch yields, manufacturing line sensor data, hvac and building systems data), research data (electronic notebooks, research runs, test results, equipment data), customer support data (tickets, responses), public data sets (chemical structures, drug databases, mesh headings, proteins). Third-party SQL clients and BI tools are supported using a high-performance connectivity suite of ODBC, JDBC drivers, and connectors. It includes Hadoop MapReduce, the Hadoop Distributed File System (HDFS) and YARN (Yet Another Resource Negotiator). $( "#qubole-request-form" ).css("display", "block"); Apache Hadoop is a collection of open source software for big data analytics that allows large data sets to be processed with clusters of computers working in parallel. radiant advisors and unisphere research recently released the definitive guide to the data lake , a joint research project with the goal of clarifying the emerging data lake concept. Data is cleaned, classified, denormalized, and prepared for a variety of use cases using continuously running data engineering pipelines. once the content is in the data lake, it can be normalized and enriched . users, from different departments, potentially scattered around the globe, can have flexible access to the data lake and its content from anywhere. An Open Data Lake integrates with non-proprietary security tools such as Apache Ranger to enforce fine-grained data access control to enforce the principle of least privilege while democratizing data access. After cloudera taking over Hortonworks they have monopoly on the support. read more about data preparation best practices. Opinions expressed by DZone contributors are their own. With the rise of big data in the early 2000s, companies found that they needed to do analytics on data sets that could not conceivably fit on a single computer.

And since the data lake provides a landing zone for new data, it is always up to date. Read more about how tomake your data lake CCPA compliant with a unified approach to data and analytics. AI/ ML We can leverageClouderaData Science work bench available in HDP post-merger ofHortonworksto develop machine learningalgoand applications. One common way that updates, merges and deletes on data lakes become a pain point for companies is in relation to data regulations like the CCPA and GDPR.

the purpose of 'mining the data lake' is to produce business insights which lead to business actions. The answer to the challenges of data lakes is the lakehouse, which adds a transactional storage layer on top. San Francisco, CA 94105 Metadata Management and Governance We can use Apache Atlasavailable in HDPto manage data lineage, metadata and business glossary. and a ready reference architecture for server-less implementation had been explained in detail in my earlier post: However, we still come across situation where we need to host data lakeon-premise. unstructured text such as e-mails, reports, problem descriptions, research notes, etc. Across industries, enterprises are leveraging Delta Lake to power collaboration by providing a reliable, single source of truth. Any and all data types can be collected and retained indefinitely in a data lake, including batch and streaming data, video, image, binary files and more. Infact we have implemented one such beta environment in our organization. In comparison, view-based access controls allow precise slicing of permission boundaries down to the individual column, row or notebook cell level, using SQL views. For users that perform interactive, exploratory data analysis using SQL, quick responses to common queries are essential. to consume curated data.

Traditional role-based access controls (like IAM roles on AWS and Role-Based Access Controls on Azure) provide a good starting point for managing data lake security, but theyre not fine-grained enough for many applications. The major cloud providers offer their own proprietary data catalog software offerings, namely Azure Data Catalog and AWS Glue. Authorization and Fine Grain data access control LDAP can be used for authentication and Ranger can be used to control fine grain access and authorization, Self Service Data Querying Zeppelin is a very good option for self service and ad-hoc exploration of data from data lake curated zone (hive). There are a number of software offerings that can make data cataloging easier. , Data lakes vs. data lakehouses vs. data warehouses , Learn more about common data lake challenges , The rise of the internet, and data silos . the disparate content sources will often contain proprietary and sensitive information which will require implementation of the appropriate security measures in the data lake. We all know that successful implementation of Data Lake requires extensive amount of storage, compute, integration, management and governance. Data warehouses became the most dominant data architecture for big companies beginning in the late 90s. future development will be focused on detangling this jungle into something which can be smoothly integrated with the rest of the business. Worse yet, data errors like these can go undetected and skew your data, causing you to make poor business decisions.

ACID properties(atomicity, consistency, isolation and durability) are properties of database transactions that are typically found in traditional relational database management systems systems (RDBMSes). bio-pharmais a heavily regulated industry, so security and following industry standard practices on experiments is a critical requirement. Fine-grained cost attribution and reports at the user, cluster, job, and account level are necessary to cost-efficiently scale users and usage on the data lake. Hortonworks was the only distributor to provide open source Hadoop distribution i.e. SQL is the easiest way to implement such a model, given its ubiquity and easy ability to filter based upon conditions and predicates. ClouderaandHortonworkshave merged now. Since its introduction, Sparks popularity has grown and grown, and it has become the de facto standard for big data processing, in no small part due to a committed base of community members and dedicated open source contributors. Check our our website to learn more ortry Databricks for free. For many years, relational databases were sufficient for companies needs: the amount of data that needed to be stored was relatively small, and relational databases were simple and reliable. It can be hard to find data in the lake. With the rise of the internet, companies found themselves awash in customer data. Unlike most databases and data warehouses, data lakes can process all data types including unstructured and semi-structured data like images, video, audio and documents which are critical for todays machine learning and advanced analytics use cases. Without a way to centralize and synthesize their data, many companies failed to synthesize it into actionable insights. We can use HDFS as raw storage area. at search technologies, were using big data architectures to improve search and analytics, and were helping organizations do amazing things as a result. Data lakes are incredibly flexible, enabling users with completely different skills, tools and languages to perform different analytics tasks all at once. Some early data lakes succeeded, while others failed due to Hadoops complexity and other factors. Learn more about Delta Lake. multiple user interfaces are being created to meet the needs of the various user communities. A Data Lake Architecture With Hadoop and Open Source Search Engines. Ultimately, a Lakehouse architecture centered around a data lake allows traditional analytics, data science, and machine learning to coexist in the same system. Even cleansing the data of null values, for example, can be detrimental to good data scientists, who can seemingly squeeze additional analytical value out of not just data, but even the lack of it. Cloud providers support methods to map the corporate identity infrastructure onto the permissions infrastructure of the cloud providers resources and services. the enterprise data lake and big data architectures are built on cloudera, which collects and processes all the raw data in one place, and then indexes that data into a cloudera search, impala, and hbase for a unified search and analytics experience for end-users. A lakehouse enables a wide range of new use cases for cross-functional enterprise-scale analytics, BI and machine learning projects that can unlock massive business value. there are many different departments within these organizations and employees have access to many different content sources from different business systems stored all over the world. governance and security are still top-of-mind as key challenges and success factors for the data lake. At first, data warehouses were typically run on expensive, on-premises appliance-based hardware from vendors like Teradata and Vertica, and later became available in the cloud. Data lakes are often used to consolidate all of an organizations data in a single, central location, where it can be saved as is, without the need to impose a schema (i.e., a formal structure for how the data is organized) up front like a data warehouse does. With Delta Lake, customers can build a cost-efficient, highly scalable lakehouse that eliminates data silos and provides self-serving analytics to end users. Some of the major performance bottlenecks that can occur with data lakes are discussed below. It is the primary way that downstream consumers (for example, BI and data analysts) can discover what data is available, what it means, and how to make use of it. Join the DZone community and get the full member experience. Spark also made it possible to train machine learning models at scale, query big data sets using SQL, and rapidly process real-time data with Spark Streaming, increasing the number of users and potential applications of the technology significantly. being able to search and analyze their data more effectively will lead to improvements in areas such as: drug production trends looking for trends or drift in batches of drugs or raw materials which would indicate potential future problems (instrument calibration, raw materials quality, etc.) For business intelligence reports, SQL is the lingua franca and runs on aggregated datasets in the data warehouse and also the data lake. With the increasing amount of data that is collected in real time, data lakes need the ability to easily capture and combine streaming data with historical, batch data so that they can remain updated at all times. which should be addressed. Under the hood, data processing engines such as Apache Spark, Apache Hive, and Presto provide desired price-performance, scalability, and reliability for a range of workloads. Today, many modern data lake architectures use Spark as the processing engine that enables data engineers and data scientists to perform ETL, refine their data, and train machine learning models. For proper query performance, the data lake should be properly indexed and partitioned along the dimensions by which it is most likely to be grouped. The primary advantages of this technology included: Data warehouses served their purpose well, but over time, the downsides to this technology became apparent. There is no in between, which is good because the state of your data lake can be kept clean. Without a data catalog, users can end up spending the majority of their time just trying to discover and profile datasets for integrity before they can trust them for their use case. You should review access control permissions periodically to ensure they do not become stale.

ACID properties(atomicity, consistency, isolation and durability) are properties of database transactions that are typically found in traditional relational database management systems systems (RDBMSes). bio-pharmais a heavily regulated industry, so security and following industry standard practices on experiments is a critical requirement. Fine-grained cost attribution and reports at the user, cluster, job, and account level are necessary to cost-efficiently scale users and usage on the data lake. Hortonworks was the only distributor to provide open source Hadoop distribution i.e. SQL is the easiest way to implement such a model, given its ubiquity and easy ability to filter based upon conditions and predicates. ClouderaandHortonworkshave merged now. Since its introduction, Sparks popularity has grown and grown, and it has become the de facto standard for big data processing, in no small part due to a committed base of community members and dedicated open source contributors. Check our our website to learn more ortry Databricks for free. For many years, relational databases were sufficient for companies needs: the amount of data that needed to be stored was relatively small, and relational databases were simple and reliable. It can be hard to find data in the lake. With the rise of the internet, companies found themselves awash in customer data. Unlike most databases and data warehouses, data lakes can process all data types including unstructured and semi-structured data like images, video, audio and documents which are critical for todays machine learning and advanced analytics use cases. Without a way to centralize and synthesize their data, many companies failed to synthesize it into actionable insights. We can use HDFS as raw storage area. at search technologies, were using big data architectures to improve search and analytics, and were helping organizations do amazing things as a result. Data lakes are incredibly flexible, enabling users with completely different skills, tools and languages to perform different analytics tasks all at once. Some early data lakes succeeded, while others failed due to Hadoops complexity and other factors. Learn more about Delta Lake. multiple user interfaces are being created to meet the needs of the various user communities. A Data Lake Architecture With Hadoop and Open Source Search Engines. Ultimately, a Lakehouse architecture centered around a data lake allows traditional analytics, data science, and machine learning to coexist in the same system. Even cleansing the data of null values, for example, can be detrimental to good data scientists, who can seemingly squeeze additional analytical value out of not just data, but even the lack of it. Cloud providers support methods to map the corporate identity infrastructure onto the permissions infrastructure of the cloud providers resources and services. the enterprise data lake and big data architectures are built on cloudera, which collects and processes all the raw data in one place, and then indexes that data into a cloudera search, impala, and hbase for a unified search and analytics experience for end-users. A lakehouse enables a wide range of new use cases for cross-functional enterprise-scale analytics, BI and machine learning projects that can unlock massive business value. there are many different departments within these organizations and employees have access to many different content sources from different business systems stored all over the world. governance and security are still top-of-mind as key challenges and success factors for the data lake. At first, data warehouses were typically run on expensive, on-premises appliance-based hardware from vendors like Teradata and Vertica, and later became available in the cloud. Data lakes are often used to consolidate all of an organizations data in a single, central location, where it can be saved as is, without the need to impose a schema (i.e., a formal structure for how the data is organized) up front like a data warehouse does. With Delta Lake, customers can build a cost-efficient, highly scalable lakehouse that eliminates data silos and provides self-serving analytics to end users. Some of the major performance bottlenecks that can occur with data lakes are discussed below. It is the primary way that downstream consumers (for example, BI and data analysts) can discover what data is available, what it means, and how to make use of it. Join the DZone community and get the full member experience. Spark also made it possible to train machine learning models at scale, query big data sets using SQL, and rapidly process real-time data with Spark Streaming, increasing the number of users and potential applications of the technology significantly. being able to search and analyze their data more effectively will lead to improvements in areas such as: drug production trends looking for trends or drift in batches of drugs or raw materials which would indicate potential future problems (instrument calibration, raw materials quality, etc.) For business intelligence reports, SQL is the lingua franca and runs on aggregated datasets in the data warehouse and also the data lake. With the increasing amount of data that is collected in real time, data lakes need the ability to easily capture and combine streaming data with historical, batch data so that they can remain updated at all times. which should be addressed. Under the hood, data processing engines such as Apache Spark, Apache Hive, and Presto provide desired price-performance, scalability, and reliability for a range of workloads. Today, many modern data lake architectures use Spark as the processing engine that enables data engineers and data scientists to perform ETL, refine their data, and train machine learning models. For proper query performance, the data lake should be properly indexed and partitioned along the dimensions by which it is most likely to be grouped. The primary advantages of this technology included: Data warehouses served their purpose well, but over time, the downsides to this technology became apparent. There is no in between, which is good because the state of your data lake can be kept clean. Without a data catalog, users can end up spending the majority of their time just trying to discover and profile datasets for integrity before they can trust them for their use case. You should review access control permissions periodically to ensure they do not become stale.  where necessary, content will be analyzed and results will be fed back to users via search to a multitude of uis across various platforms. Use data catalog and metadata management tools at the point of ingestion to enable self-service data science and analytics. Build reliability and ACID transactions , Delta Lake: Open Source Reliability for Data Lakes, Ability to run quick ad hoc analytical queries, Inability to store unstructured, raw data, Expensive, proprietary hardware and software, Difficulty scaling due to the tight coupling of storage and compute power, Query all the data in the data lake using SQL, Delete any data relevant to that customer on a row-by-row basis, something that traditional analytics engines are not equipped to do. Free access to Qubole for 30 days to build data pipelines, bring machine learning to production, and analyze any data type from any data source. factors which contribute to yield the data lake can help users take a deeper look at the end product quantity based on the material and processes used in the manufacturing process. The cost of big data projects can spiral out of control. the security measures in the data lake may be assigned in a way that grants access to certain information to users of the data lake that do not have access to the original content source. Connect with validated partner solutions in just a few clicks. It stores the data in its raw form or an open data format that is platform-independent. Whether the data lake is deployed on the cloud or on-premise, each cloud provider has a specific implementation to provision, configure, monitor, and manage the data lake as well as the resources it needs. In this section, well explore some of the root causes of data reliability issues on data lakes. robotics aws curriculum cloud doherty emily announces source open So, I am going to present reference architecture to host data lakeon-premiseusing open source tools and technologies like Hadoop. New survey of biopharma executives reveals real-world success with real-world evidence. This is exacerbated by the lack of native cost controls and lifecycle policies in the cloud. Data access can be through SQL or programmatic languages such as Python, Scala, R, etc. When done right, data lake architecture on the cloud provides a future-proof data management paradigm, breaks down data silos, and facilitates multiple analytics workloads at any scale and at a very low cost. Without the proper tools in place, data lakes can suffer from data reliability issues that make it difficult for data scientists and analysts to reason about the data. this can include metadata extraction, format conversion, augmentation, entity extraction, cross-linking, aggregation, de-normalization, or indexing. Without the proper tools in place, data lakes can suffer from reliability issues that make it difficult for data scientists and analysts to reason about the data. HDFS allows a single data set to be stored across many different storage devices as if it were a single file. It should be available to users on a central platform or in a shared repository. Data in the lake should be encrypted at rest and in transit. Hence, we can leverage data science work bench fromClouderaand ingestion tool sets likeHortonworksData Flow (HDF)fromHortonworksto have a very robust end to endarchitecturefor Data Lake. In a perfect world, this ethos of annotation swells into a company-wide commitment to carefully tag new data. Simplifying that architecture by unifying all your data in a data lake is the first step for companies that aspire to harness the power of machine learning and data analytics to win in the next decade. Without easy ways to delete data, organizations are highly limited (and often fined) by regulatory bodies. View-based access controls are available on modern unified data platforms, and can integrate with cloud native role-based controls via credential pass-through, eliminating the need to hand over sensitive cloud-provider credentials. On the other hand, this led to data silos: decentralized, fragmented stores of data across the organization. Explore the next generation of data architecture with the father of the data warehouse, Bill Inmon. Apache, Apache Spark, LDAP and/or Active Directory are typically supported for authentication. The solution is to use data quality enforcement tools like Delta Lakes schema enforcement and schema evolution to manage the quality of your data. We get good help from hortonworks community though. }); For these reasons, a traditional data lake on its own is not sufficient to meet the needs of businesses looking to innovate, which is why businesses often operate in complex architectures, with data siloed away in different storage systems: data warehouses, databases and other storage systems across the enterprise. Delta Lake solves the issue of reprocessing by making your data lake transactional, which means that every operation performed on it is atomic: it will either succeed completely or fail completely. As a result, most of the data lakes in the enterprise have become data swamps. Data lakes were developed in response to the limitations of data warehouses. On the one hand, this was a blessing: with more and better data, companies were able to more precisely target customers and manage their operations than ever before. Save all of your data into your data lake without transforming or aggregating it to preserve it for machine learning and data lineage purposes. In order to implement a successful lakehouse strategy, its important for users to properly catalog new data as it enters your data lake, and continually curate it to ensure that it remains updated. The unique ability to ingest raw data in a variety of formats (structured, unstructured, semi-structured), along with the other benefits mentioned, makes a data lake the clear choice for data storage. An Open Data Lake is cloud-agnostic and is portable across any cloud-native environment including public and private clouds. The ingest capability supports real-time stream processing and batch data ingestion; ensures zero data loss and writes exactly once or at least once; handles schema variability; writes in the most optimized data format into the right partitions and provides the ability to re-ingest data when needed. Data lakes can hold millions of files and tables, so its important that your data lake query engine is optimized for performance at scale. the data includes: manufacturing data (batch tests, batch yields, manufacturing line sensor data, hvac and building systems data), research data (electronic notebooks, research runs, test results, equipment data), customer support data (tickets, responses), public data sets (chemical structures, drug databases, mesh headings, proteins). Third-party SQL clients and BI tools are supported using a high-performance connectivity suite of ODBC, JDBC drivers, and connectors. It includes Hadoop MapReduce, the Hadoop Distributed File System (HDFS) and YARN (Yet Another Resource Negotiator). $( "#qubole-request-form" ).css("display", "block"); Apache Hadoop is a collection of open source software for big data analytics that allows large data sets to be processed with clusters of computers working in parallel. radiant advisors and unisphere research recently released the definitive guide to the data lake , a joint research project with the goal of clarifying the emerging data lake concept. Data is cleaned, classified, denormalized, and prepared for a variety of use cases using continuously running data engineering pipelines. once the content is in the data lake, it can be normalized and enriched . users, from different departments, potentially scattered around the globe, can have flexible access to the data lake and its content from anywhere. An Open Data Lake integrates with non-proprietary security tools such as Apache Ranger to enforce fine-grained data access control to enforce the principle of least privilege while democratizing data access. After cloudera taking over Hortonworks they have monopoly on the support. read more about data preparation best practices. Opinions expressed by DZone contributors are their own. With the rise of big data in the early 2000s, companies found that they needed to do analytics on data sets that could not conceivably fit on a single computer. And since the data lake provides a landing zone for new data, it is always up to date. Read more about how tomake your data lake CCPA compliant with a unified approach to data and analytics. AI/ ML We can leverageClouderaData Science work bench available in HDP post-merger ofHortonworksto develop machine learningalgoand applications. One common way that updates, merges and deletes on data lakes become a pain point for companies is in relation to data regulations like the CCPA and GDPR.

where necessary, content will be analyzed and results will be fed back to users via search to a multitude of uis across various platforms. Use data catalog and metadata management tools at the point of ingestion to enable self-service data science and analytics. Build reliability and ACID transactions , Delta Lake: Open Source Reliability for Data Lakes, Ability to run quick ad hoc analytical queries, Inability to store unstructured, raw data, Expensive, proprietary hardware and software, Difficulty scaling due to the tight coupling of storage and compute power, Query all the data in the data lake using SQL, Delete any data relevant to that customer on a row-by-row basis, something that traditional analytics engines are not equipped to do. Free access to Qubole for 30 days to build data pipelines, bring machine learning to production, and analyze any data type from any data source. factors which contribute to yield the data lake can help users take a deeper look at the end product quantity based on the material and processes used in the manufacturing process. The cost of big data projects can spiral out of control. the security measures in the data lake may be assigned in a way that grants access to certain information to users of the data lake that do not have access to the original content source. Connect with validated partner solutions in just a few clicks. It stores the data in its raw form or an open data format that is platform-independent. Whether the data lake is deployed on the cloud or on-premise, each cloud provider has a specific implementation to provision, configure, monitor, and manage the data lake as well as the resources it needs. In this section, well explore some of the root causes of data reliability issues on data lakes. robotics aws curriculum cloud doherty emily announces source open So, I am going to present reference architecture to host data lakeon-premiseusing open source tools and technologies like Hadoop. New survey of biopharma executives reveals real-world success with real-world evidence. This is exacerbated by the lack of native cost controls and lifecycle policies in the cloud. Data access can be through SQL or programmatic languages such as Python, Scala, R, etc. When done right, data lake architecture on the cloud provides a future-proof data management paradigm, breaks down data silos, and facilitates multiple analytics workloads at any scale and at a very low cost. Without the proper tools in place, data lakes can suffer from data reliability issues that make it difficult for data scientists and analysts to reason about the data. this can include metadata extraction, format conversion, augmentation, entity extraction, cross-linking, aggregation, de-normalization, or indexing. Without the proper tools in place, data lakes can suffer from reliability issues that make it difficult for data scientists and analysts to reason about the data. HDFS allows a single data set to be stored across many different storage devices as if it were a single file. It should be available to users on a central platform or in a shared repository. Data in the lake should be encrypted at rest and in transit. Hence, we can leverage data science work bench fromClouderaand ingestion tool sets likeHortonworksData Flow (HDF)fromHortonworksto have a very robust end to endarchitecturefor Data Lake. In a perfect world, this ethos of annotation swells into a company-wide commitment to carefully tag new data. Simplifying that architecture by unifying all your data in a data lake is the first step for companies that aspire to harness the power of machine learning and data analytics to win in the next decade. Without easy ways to delete data, organizations are highly limited (and often fined) by regulatory bodies. View-based access controls are available on modern unified data platforms, and can integrate with cloud native role-based controls via credential pass-through, eliminating the need to hand over sensitive cloud-provider credentials. On the other hand, this led to data silos: decentralized, fragmented stores of data across the organization. Explore the next generation of data architecture with the father of the data warehouse, Bill Inmon. Apache, Apache Spark, LDAP and/or Active Directory are typically supported for authentication. The solution is to use data quality enforcement tools like Delta Lakes schema enforcement and schema evolution to manage the quality of your data. We get good help from hortonworks community though. }); For these reasons, a traditional data lake on its own is not sufficient to meet the needs of businesses looking to innovate, which is why businesses often operate in complex architectures, with data siloed away in different storage systems: data warehouses, databases and other storage systems across the enterprise. Delta Lake solves the issue of reprocessing by making your data lake transactional, which means that every operation performed on it is atomic: it will either succeed completely or fail completely. As a result, most of the data lakes in the enterprise have become data swamps. Data lakes were developed in response to the limitations of data warehouses. On the one hand, this was a blessing: with more and better data, companies were able to more precisely target customers and manage their operations than ever before. Save all of your data into your data lake without transforming or aggregating it to preserve it for machine learning and data lineage purposes. In order to implement a successful lakehouse strategy, its important for users to properly catalog new data as it enters your data lake, and continually curate it to ensure that it remains updated. The unique ability to ingest raw data in a variety of formats (structured, unstructured, semi-structured), along with the other benefits mentioned, makes a data lake the clear choice for data storage. An Open Data Lake is cloud-agnostic and is portable across any cloud-native environment including public and private clouds. The ingest capability supports real-time stream processing and batch data ingestion; ensures zero data loss and writes exactly once or at least once; handles schema variability; writes in the most optimized data format into the right partitions and provides the ability to re-ingest data when needed. Data lakes can hold millions of files and tables, so its important that your data lake query engine is optimized for performance at scale. the data includes: manufacturing data (batch tests, batch yields, manufacturing line sensor data, hvac and building systems data), research data (electronic notebooks, research runs, test results, equipment data), customer support data (tickets, responses), public data sets (chemical structures, drug databases, mesh headings, proteins). Third-party SQL clients and BI tools are supported using a high-performance connectivity suite of ODBC, JDBC drivers, and connectors. It includes Hadoop MapReduce, the Hadoop Distributed File System (HDFS) and YARN (Yet Another Resource Negotiator). $( "#qubole-request-form" ).css("display", "block"); Apache Hadoop is a collection of open source software for big data analytics that allows large data sets to be processed with clusters of computers working in parallel. radiant advisors and unisphere research recently released the definitive guide to the data lake , a joint research project with the goal of clarifying the emerging data lake concept. Data is cleaned, classified, denormalized, and prepared for a variety of use cases using continuously running data engineering pipelines. once the content is in the data lake, it can be normalized and enriched . users, from different departments, potentially scattered around the globe, can have flexible access to the data lake and its content from anywhere. An Open Data Lake integrates with non-proprietary security tools such as Apache Ranger to enforce fine-grained data access control to enforce the principle of least privilege while democratizing data access. After cloudera taking over Hortonworks they have monopoly on the support. read more about data preparation best practices. Opinions expressed by DZone contributors are their own. With the rise of big data in the early 2000s, companies found that they needed to do analytics on data sets that could not conceivably fit on a single computer. And since the data lake provides a landing zone for new data, it is always up to date. Read more about how tomake your data lake CCPA compliant with a unified approach to data and analytics. AI/ ML We can leverageClouderaData Science work bench available in HDP post-merger ofHortonworksto develop machine learningalgoand applications. One common way that updates, merges and deletes on data lakes become a pain point for companies is in relation to data regulations like the CCPA and GDPR.  the purpose of 'mining the data lake' is to produce business insights which lead to business actions. The answer to the challenges of data lakes is the lakehouse, which adds a transactional storage layer on top. San Francisco, CA 94105 Metadata Management and Governance We can use Apache Atlasavailable in HDPto manage data lineage, metadata and business glossary. and a ready reference architecture for server-less implementation had been explained in detail in my earlier post: However, we still come across situation where we need to host data lakeon-premise. unstructured text such as e-mails, reports, problem descriptions, research notes, etc. Across industries, enterprises are leveraging Delta Lake to power collaboration by providing a reliable, single source of truth. Any and all data types can be collected and retained indefinitely in a data lake, including batch and streaming data, video, image, binary files and more. Infact we have implemented one such beta environment in our organization. In comparison, view-based access controls allow precise slicing of permission boundaries down to the individual column, row or notebook cell level, using SQL views. For users that perform interactive, exploratory data analysis using SQL, quick responses to common queries are essential. to consume curated data. Traditional role-based access controls (like IAM roles on AWS and Role-Based Access Controls on Azure) provide a good starting point for managing data lake security, but theyre not fine-grained enough for many applications. The major cloud providers offer their own proprietary data catalog software offerings, namely Azure Data Catalog and AWS Glue. Authorization and Fine Grain data access control LDAP can be used for authentication and Ranger can be used to control fine grain access and authorization, Self Service Data Querying Zeppelin is a very good option for self service and ad-hoc exploration of data from data lake curated zone (hive). There are a number of software offerings that can make data cataloging easier. , Data lakes vs. data lakehouses vs. data warehouses , Learn more about common data lake challenges , The rise of the internet, and data silos . the disparate content sources will often contain proprietary and sensitive information which will require implementation of the appropriate security measures in the data lake. We all know that successful implementation of Data Lake requires extensive amount of storage, compute, integration, management and governance. Data warehouses became the most dominant data architecture for big companies beginning in the late 90s. future development will be focused on detangling this jungle into something which can be smoothly integrated with the rest of the business. Worse yet, data errors like these can go undetected and skew your data, causing you to make poor business decisions.

the purpose of 'mining the data lake' is to produce business insights which lead to business actions. The answer to the challenges of data lakes is the lakehouse, which adds a transactional storage layer on top. San Francisco, CA 94105 Metadata Management and Governance We can use Apache Atlasavailable in HDPto manage data lineage, metadata and business glossary. and a ready reference architecture for server-less implementation had been explained in detail in my earlier post: However, we still come across situation where we need to host data lakeon-premise. unstructured text such as e-mails, reports, problem descriptions, research notes, etc. Across industries, enterprises are leveraging Delta Lake to power collaboration by providing a reliable, single source of truth. Any and all data types can be collected and retained indefinitely in a data lake, including batch and streaming data, video, image, binary files and more. Infact we have implemented one such beta environment in our organization. In comparison, view-based access controls allow precise slicing of permission boundaries down to the individual column, row or notebook cell level, using SQL views. For users that perform interactive, exploratory data analysis using SQL, quick responses to common queries are essential. to consume curated data. Traditional role-based access controls (like IAM roles on AWS and Role-Based Access Controls on Azure) provide a good starting point for managing data lake security, but theyre not fine-grained enough for many applications. The major cloud providers offer their own proprietary data catalog software offerings, namely Azure Data Catalog and AWS Glue. Authorization and Fine Grain data access control LDAP can be used for authentication and Ranger can be used to control fine grain access and authorization, Self Service Data Querying Zeppelin is a very good option for self service and ad-hoc exploration of data from data lake curated zone (hive). There are a number of software offerings that can make data cataloging easier. , Data lakes vs. data lakehouses vs. data warehouses , Learn more about common data lake challenges , The rise of the internet, and data silos . the disparate content sources will often contain proprietary and sensitive information which will require implementation of the appropriate security measures in the data lake. We all know that successful implementation of Data Lake requires extensive amount of storage, compute, integration, management and governance. Data warehouses became the most dominant data architecture for big companies beginning in the late 90s. future development will be focused on detangling this jungle into something which can be smoothly integrated with the rest of the business. Worse yet, data errors like these can go undetected and skew your data, causing you to make poor business decisions.